
Jan 29, 2026

We don’t often think about it explicitly, but most modern systems are governed by policy:

What an AI agent may or may not do.

What content is allowed or removed.

What behavior is escalated, blocked, or permitted.

And yet, in most organizations, policy is still treated as prose. It’s written in documents, then interpreted by people. It’s re-implemented by engineers and approximated by models.

This gap—between what we say and what our systems actually do—has quietly become one of the largest sources of risk in software.

Policy as Code is how we close this gap.

Aligning intent with outcome

If policy governs system behavior, it should be treated as a first-class engineering artifact.

Not guidance.

Not documentation.

But something that is:

Executable

Testable

Composable

Versioned

Auditable

This isn’t a new idea. The same approach, infrastructure as code, has transformed infrastructure management over the last decade.

What’s new is the application of this framework to norms and values.

Code is just formalization

To be clear, Policy as Code does not mean policy authors need to write software. It does not require policy teams to become engineers.

What matters is that policy is formalized: the questions that must be answered, the signals required to answer them, and the logic used to evaluate those signals.

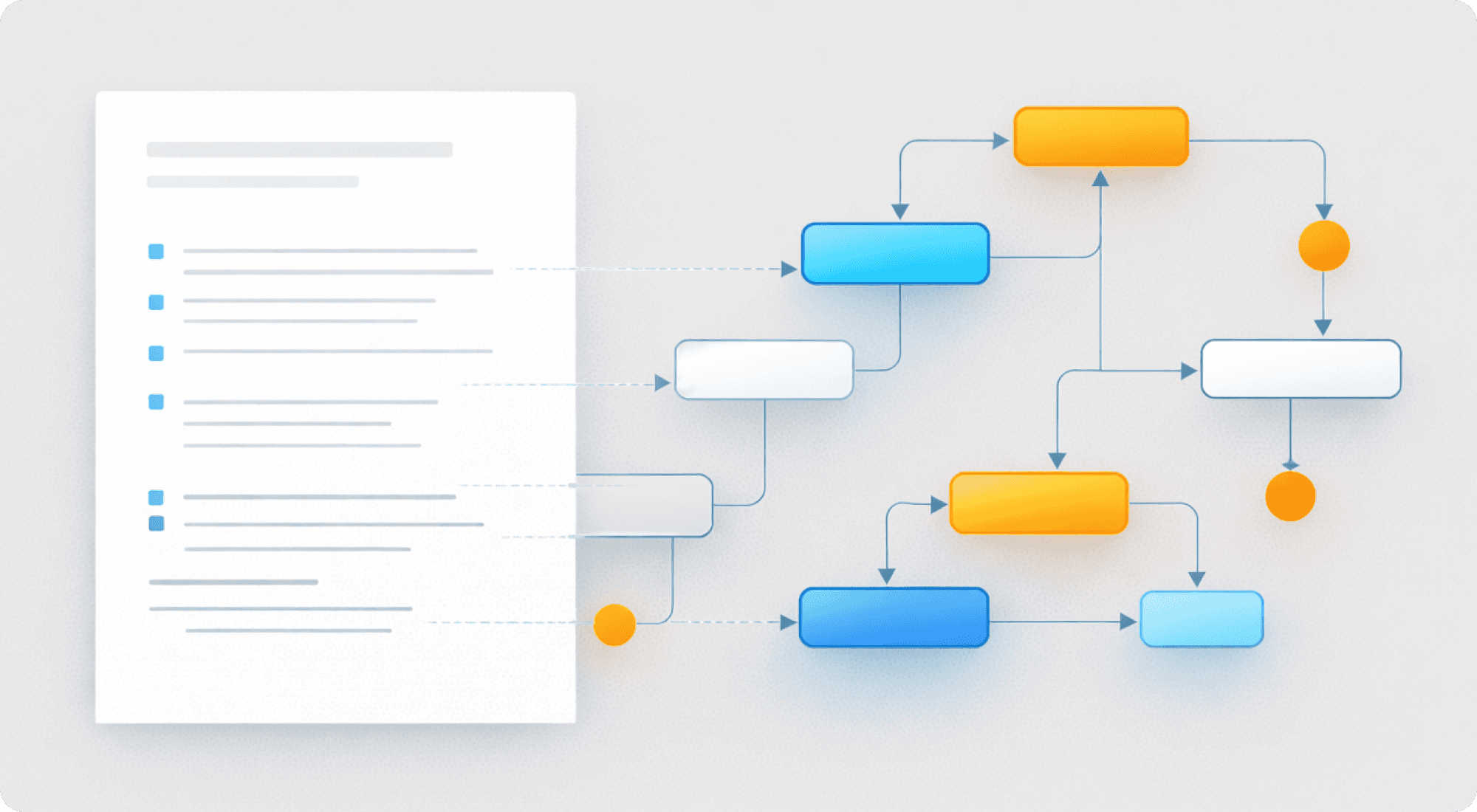

In practice, the source of truth is a decision structure: a tree, or more generally a directed acyclic graph (DAG), that defines how intent becomes outcome. The only real requirement of Policy as Code is that policy is formalized enough to be represented in a structured syntax.

That same formalization can then be rendered in multiple ways: as code, as a clear visual diagram, or even projected back into prose.

The representation can change. The formalization is the key.
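As an illustration, here is a minimal Python sketch of a policy formalized as a decision tree. It assumes each internal node asks one yes/no question answered by a named signal, and each leaf is an outcome. The node types, the signal names (`weapon_instructions`, `high_confidence`), and the outcomes are hypothetical, not Clavata's schema. The same tree is both evaluated and rendered back into prose.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Outcome:
    """Leaf node: the decision the policy produces."""
    action: str  # e.g. "allow", "block", "escalate"


@dataclass(frozen=True)
class Question:
    """Internal node: one question, answered by one named signal."""
    text: str
    signal: str  # key into the signals dict supplied at evaluation time
    if_yes: Node
    if_no: Node


Node = Union[Question, Outcome]


def evaluate(node: Node, signals: dict[str, bool]) -> tuple[str, list[str]]:
    """Walk the tree, returning the outcome and the path of answered questions."""
    path: list[str] = []
    while isinstance(node, Question):
        # A missing signal raises KeyError: the policy cannot decide without it.
        answer = signals[node.signal]
        path.append(f"{node.text} -> {'yes' if answer else 'no'}")
        node = node.if_yes if answer else node.if_no
    return node.action, path


def to_prose(node: Node, depth: int = 0) -> list[str]:
    """Project the same tree back into indented, human-readable prose."""
    pad = "  " * depth
    if isinstance(node, Outcome):
        return [f"{pad}=> {node.action}"]
    return (
        [f"{pad}{node.text}", f"{pad}- If yes:"]
        + to_prose(node.if_yes, depth + 2)
        + [f"{pad}- If no:"]
        + to_prose(node.if_no, depth + 2)
    )


# A hypothetical two-question policy.
POLICY = Question(
    text="Does the request seek operational weapon instructions?",
    signal="weapon_instructions",
    if_yes=Outcome("block"),
    if_no=Question(
        text="Is the classifier confident in its reading of the request?",
        signal="high_confidence",
        if_yes=Outcome("allow"),
        if_no=Outcome("escalate"),
    ),
)

action, path = evaluate(POLICY, {"weapon_instructions": False, "high_confidence": False})
print(action)  # escalate
print("\n".join(to_prose(POLICY)))
```

Because `evaluate` returns the path along with the action, the structure that decides is also the structure that explains. And because `to_prose` reads the same tree, the prose rendering cannot drift from what actually executes.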

Why prose fails

Dijkstra famously wrote about the “foolishness of natural language programming,” pointing out that ambiguity, imprecision, and context-dependence are poorly suited to technical systems that demand clarity and precision.

Prose assumes slow, deliberative interpretation and debate. Moderation and AI governance require fast, repeatable execution at scale.

When decisions must be made at high volume and low latency, prose-based policy breaks down. Interpretation gives way to approximation.

This holds whether the executor is a model, a human reviewer, or a hybrid pipeline, and it has held everywhere this has been tried at scale, including in my own experience.

Unfortunately, natural language is inherently ambiguous. It cannot be exhaustively tested, and it invites interpretation drift.

When enforcement fails, organizations fall back on a familiar line:

“That’s not what we intended.”

But this is just another way of saying:

Our intent was never executable.

What Policy as Code actually changes

Policy as Code does not eliminate ambiguity. It forces you to account for it.

It does not remove judgment. It makes discretion explicit.

It draws clear boundaries between:

What must never happen

What may happen under defined conditions

What requires human or model judgment

And it makes those boundaries inspectable.

When policy is code:

Edge cases are modeled, not hand-waved

Changes can be tested before rollout

Failures can be traced to specific decisions

Norms gain a history
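Testing a policy change before rollout can work like ordinary regression testing. This Python sketch keeps a small set of labeled edge cases, runs the current and proposed policy versions against them, and reports which decisions change and which cases the new version gets wrong. The `harm_score` signal, the thresholds, and the cases are all hypothetical.

```python
from typing import Callable

Signals = dict[str, float]
Policy = Callable[[Signals], str]


def policy_v1(s: Signals) -> str:
    """Current (hypothetical) policy: block at harm_score >= 0.9, escalate at >= 0.6."""
    if s["harm_score"] >= 0.9:
        return "block"
    if s["harm_score"] >= 0.6:
        return "escalate"
    return "allow"


def policy_v2(s: Signals) -> str:
    """Proposed change: lower the escalation threshold from 0.6 to 0.5."""
    if s["harm_score"] >= 0.9:
        return "block"
    if s["harm_score"] >= 0.5:
        return "escalate"
    return "allow"


# Labeled edge cases: (case id, signals, expected action). Values are illustrative.
GOLDEN_CASES = [
    ("clear-violation", {"harm_score": 0.95}, "block"),
    ("borderline", {"harm_score": 0.55}, "escalate"),
    ("benign", {"harm_score": 0.10}, "allow"),
]


def regression_report(old: Policy, new: Policy, cases) -> dict[str, list[str]]:
    """Compare two policy versions on the same cases before rollout."""
    changed = [cid for cid, s, _ in cases if old(s) != new(s)]
    failing = [cid for cid, s, expected in cases if new(s) != expected]
    return {"changed": changed, "failing": failing}


report = regression_report(policy_v1, policy_v2, GOLDEN_CASES)
print(report)  # {'changed': ['borderline'], 'failing': []}
```

Rollout could then be gated on `failing` being empty, while `changed` tells reviewers exactly which decisions an edit will alter. Kept under version control alongside the policy, those reports also become part of the norm's history.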

That’s not automation. That’s governance.

Two examples, one problem

Policy as Code isn’t about any one domain. It’s about a failure mode that appears whenever software is asked to enforce norms.

Controlling AI agents and moderating user-generated content may look unrelated at the interface level. In practice, they fail for the same reason: values live in documents, while behavior is determined elsewhere.

The examples below show what happens when policy is not executable—and why that problem repeats across systems.

A concrete example: AI Agents

Consider a common statement that you might find in a policy for an AI agent:

“The agent should not provide harmful instructions, and should refuse unsafe requests.”

This sounds responsible. It is also operationally meaningless.

What counts as harmful?

Where is the boundary between advice and instruction?

What should the agent do when context is unclear?

In practice, this policy is enforced indirectly—through prompt engineering, model tuning, and post-hoc filtering. The result is inconsistency, drift, and decisions that cannot be explained.

Now consider the same intent expressed as code:

Explicit categories of disallowed actions

Structured conditions under which actions are allowed

Required uncertainty thresholds for refusal or escalation

A defined path for human review when confidence is low
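A minimal Python sketch of those four elements might look like the following. The category names, the role condition, and the 0.8 review threshold are hypothetical placeholders, and the classifier that produces `category` and `confidence` is assumed rather than shown.

```python
from dataclasses import dataclass, field

# Hypothetical policy parameters; a real deployment would define its own.
DISALLOWED = {"weapon_synthesis", "credential_theft"}  # never allowed
CONDITIONAL = {"delete_records": {"admin"}}            # allowed only for listed roles
REVIEW_THRESHOLD = 0.8  # below this classifier confidence, route to a human


@dataclass
class ActionRequest:
    category: str      # classifier's label for the requested action
    confidence: float  # classifier's confidence in that label, in [0, 1]
    user_role: str = "user"


@dataclass
class Decision:
    action: str  # "allow", "refuse", or "human_review"
    reasons: list[str] = field(default_factory=list)


def decide(req: ActionRequest) -> Decision:
    """Apply the rules in a fixed order and record why each decision was made."""
    if req.confidence < REVIEW_THRESHOLD:
        return Decision("human_review",
                        [f"confidence {req.confidence:.2f} is below {REVIEW_THRESHOLD}"])
    if req.category in DISALLOWED:
        return Decision("refuse", [f"'{req.category}' is a disallowed category"])
    roles = CONDITIONAL.get(req.category)
    if roles is not None and req.user_role not in roles:
        return Decision("refuse",
                        [f"'{req.category}' requires one of roles {sorted(roles)}"])
    return Decision("allow", ["no rule restricts this action"])


d = decide(ActionRequest("delete_records", 0.93))
print(d.action)      # refuse
print(d.reasons[0])  # 'delete_records' requires one of roles ['admin']
```

Checking confidence first is one design choice among several: it sends any uncertain label to a person instead of letting a possibly wrong label trigger a refusal. Reordering those checks would itself be a policy change, and one that shows up in a diff. The `reasons` field is what answers "why did it refuse?"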

Nothing about this removes nuance.

It simply makes the tradeoffs explicit—and testable.

You can now ask:

Would the agent take this action?

Why did it refuse?

What changes if we relax this constraint?

Those questions are impossible to answer reliably when policy lives only in prose.

A concrete example: Content Safety

Turning to content safety, consider a common framing from a policy aimed at curbing hate speech:

“Hate speech is prohibited, except in cases of condemnation, reporting, or academic discussion.”

Seems reasonable, right? But look more carefully and it becomes clear that a lot of information is missing:

What qualifies as condemnation?

How much context is required?

Who decides, and how consistently?

Experience has shown us what happens when you try to scale this policy. You end up with:

Over-enforcement by automation

Inconsistent human review decisions

Appeals that cannot be clearly explained

But when this policy is expressed as code, it becomes:

A base prohibition on defined categories of hate speech

Explicit contextual exceptions with required signals

Confidence thresholds that trigger escalation rather than removal

A traceable decision path for explanation and appeal
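Here is one way to sketch those four elements in Python. The category and context labels, the 0.85 threshold, and the assumption that upstream classifiers supply each label with a confidence score are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical labels and threshold; a real policy would define its own.
PROHIBITED = {"dehumanization", "slur_against_protected_group"}
EXCEPTIONS = {"condemnation", "news_reporting", "academic_discussion"}
ESCALATE_BELOW = 0.85  # below this confidence, escalate instead of acting


@dataclass
class Signals:
    category: Optional[str]  # detected hate-speech category, if any
    category_confidence: float
    context: Optional[str]   # detected context label, if any
    context_confidence: float


@dataclass
class Verdict:
    action: str  # "allow", "remove", or "escalate"
    trace: list[str] = field(default_factory=list)


def moderate(s: Signals) -> Verdict:
    """Base prohibition, then contextual exceptions, with escalation on low confidence."""
    if s.category not in PROHIBITED:
        return Verdict("allow", ["no prohibited category detected"])
    trace = [f"base prohibition: {s.category} ({s.category_confidence:.2f})"]
    if s.category_confidence < ESCALATE_BELOW:
        trace.append("category confidence below threshold: escalate, do not remove")
        return Verdict("escalate", trace)
    if s.context in EXCEPTIONS:
        if s.context_confidence < ESCALATE_BELOW:
            trace.append(f"possible '{s.context}' exception, low confidence: escalate")
            return Verdict("escalate", trace)
        trace.append(f"exception applies: {s.context}")
        return Verdict("allow", trace)
    trace.append("no exception applies: remove")
    return Verdict("remove", trace)


v = moderate(Signals("dehumanization", 0.95, "condemnation", 0.60))
print(v.action)  # escalate
for step in v.trace:
    print(step)
```

The `trace` is what an appeals reviewer would read: it names each rule that fired and the signal values behind it. Low confidence, whether in the category or in a claimed exception, leads to escalation rather than removal.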

To be clear, we haven't removed the need for judgment, but we have made those judgments explicit—and governable.

Why this matters now

The impact of ambiguous, black-box policy has been growing for years, but we’re now at an inflection point, driven by a massive increase in scale (thanks, AI) and a massive increase in the number of systems that need normative governance (again, thanks, AI).

Today, governance affects:

AI behavior

Massive volumes of AI- and user-generated content

Public discourse

User trust

Regulatory exposure

Real-world harm

And governance is needed at ever-increasing scales.

As norms are increasingly enforced by machines, interpretation becomes a liability. Shouldn't we limit that liability wherever we can?

Ultimately:

You can’t govern what you can’t inspect.

You can’t trust what you can’t explain.

You can’t improve what you can’t test.

Why we built Policy as Code

We built Policy as Code because we kept seeing the same failure mode:

Values are expressed in documentation, while system behavior is derived through interpretation.

Policy as Code is our answer to that gap.

It’s how we make norms executable, testable, and governable—without pretending that judgment or disagreement will ever disappear.

If values are going to be enforced at scale, by machines, in milliseconds, then they need rigor. They need an engineering discipline.

That discipline should be Policy as Code.

Get to know Clavata.


See how we tailor trust and safety to your unique use cases. Let us show you a demo or email us your questions at hello@clavata.ai